In today’s digital landscape, traditional cloud computing architectures face numerous challenges. The centralized nature of cloud computing can result in high latency, bandwidth bottlenecks that limit scalability, and security concerns when data travels across networks. However, there is a rising star on the horizon: edge computing.

This approach brings computation and data storage closer to the devices that generate and use the data, which addresses key limitations of traditional cloud architectures. By adopting it, organizations can process data in real time. This changes the way we interact with technology and enables a wide range of applications.

Understanding Edge Computing

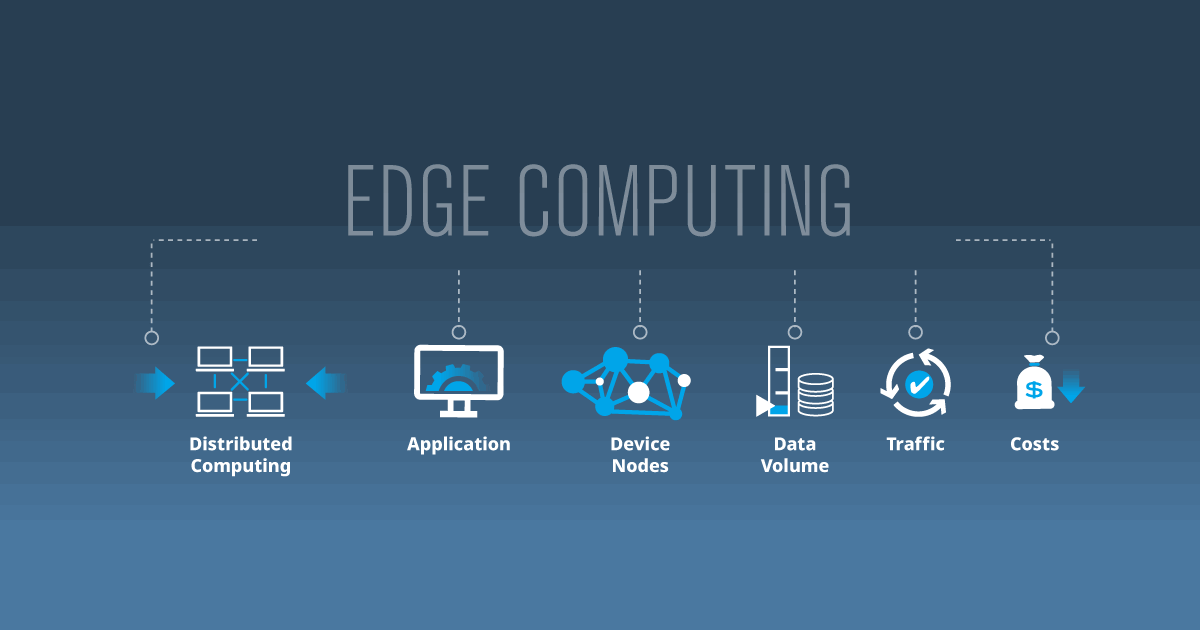

Edge computing can be defined as a decentralized computing paradigm that brings processing power and data storage closer to edge devices and sensors. This reduces the distance data must travel before it is processed.

Instead of relying solely on a central data center, edge computing distributes computational resources across a network of edge devices, such as routers, gateways, and connected IoT devices. This shift in architecture lowers latency, shortens response times, and improves efficiency.

Bringing computation and data storage closer to the edge devices

With edge computing, computation and data storage occur closer to where the data is generated or needed. This proximity allows for real-time processing and analysis and minimizes the need for data to traverse long distances to reach the cloud.

Edge devices can perform local computation, filtering, and preprocessing before selectively forwarding data to the cloud for further analysis or storage. This distributed approach reduces the load on centralized data centers and improves overall system performance.
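The Python sketch below gives a rough illustration of filtering and preprocessing on the device. It reduces a window of raw readings to a compact summary and keeps only out-of-range values verbatim. The function name `summarize_window`, the summary fields, and the `threshold` value are assumptions made for this example and do not follow any standard format. The sketch also omits how the summary would be sent upstream (MQTT, HTTP, or another protocol).

```python
from statistics import mean


def summarize_window(readings, threshold):
    """Reduce one window of raw sensor readings to what is worth sending upstream.

    Returns a compact summary plus any individual readings above `threshold`
    (a hypothetical alert limit chosen per deployment), or None for an
    empty window.
    """
    if not readings:
        return None
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Outliers are kept verbatim; everything else is summarized.
        "alerts": [r for r in readings if r > threshold],
    }


# One window of temperature readings sampled on the device.
window = [21.0, 21.4, 21.2, 35.9, 21.1]
summary = summarize_window(window, threshold=30.0)
print(summary)
```

Only the summary and the single outlier would leave the device, instead of every raw sample.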

Reducing latency and enabling faster response times

Latency is the delay between an event or request and the corresponding response, and it is a critical factor in many applications. Edge computing reduces latency by processing data at or near its source, which enables faster responses. In autonomous vehicles, for example, real-time decision-making is crucial for safety.

With edge computing, vehicles can analyze sensor data locally and make split-second decisions without waiting for instructions from a distant cloud server. This near-instantaneous response greatly improves the reliability and effectiveness of real-time applications.
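A quick worked calculation shows why milliseconds matter for vehicles. The sketch below converts a processing delay into the distance a car travels before it can react. The 100 ms cloud round trip and the 10 ms on-board figure are illustrative assumptions, not measurements.

```python
def distance_during_delay(speed_kmh, delay_ms):
    """Meters traveled at `speed_kmh` while waiting `delay_ms` for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Illustrative delays only, not measurements.
for label, delay_ms in [("cloud round trip", 100), ("on-board processing", 10)]:
    meters = distance_during_delay(100, delay_ms)
    print(f"{label}: {meters:.2f} m at 100 km/h")
```

At 100 km/h (about 27.8 m/s), the car covers roughly 2.78 m during a 100 ms delay and about 0.28 m during a 10 ms delay.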

The distinction between edge AI and cloud computing

While both edge computing and cloud computing are essential components of the modern computing landscape, they serve distinct purposes. Cloud computing focuses on centralized processing, storage, and delivery of data. Edge computing, by contrast, brings computation and data storage closer to edge devices.

Edge AI specifically refers to the integration of AI algorithms and capabilities at the edge, enabling real-time decision-making without relying on cloud connectivity.

Advantages of Edge Computing and Edge AI

Reduced latency for improved user experiences: One of the primary advantages of edge computing and Edge AI is reduced latency. Processing data locally, on or near the devices that produce it, minimizes latency and results in faster responses and better user experiences. Applications that require real-time interaction, such as video streaming, online gaming, and teleconferencing, benefit greatly from this reduced latency.

Enhanced data privacy and security: Edge computing improves data privacy and security by minimizing the need to transmit sensitive data to the cloud. Data can be processed and analyzed locally, so sensitive information is less exposed to security risks during transmission. This gives organizations greater control over confidential data, which matters especially for applications that handle personal information.

Enhanced intelligence and flexibility of AI applications: Edge AI brings intelligence and flexibility directly to edge computing devices. By deploying AI algorithms at the edge, devices can make autonomous decisions in real time without relying on continuous connectivity to the cloud. This capability enables edge devices to function even in environments with limited or intermittent network connectivity, making them more resilient and adaptable.

Real-time insights and faster response times: Edge AI enables real-time insights and faster response times by analyzing data locally at the edge. In time-critical scenarios, such as real-time monitoring, autonomous vehicles, or predictive maintenance, the ability to process data and make decisions at the edge is invaluable. Edge AI empowers devices to react swiftly to changing conditions, leading to improved operational efficiency and timely interventions.

Reduced cost and bandwidth requirements: Edge computing and Edge AI can significantly reduce costs associated with data transmission and cloud processing. By performing data processing at the edge, the amount of data transmitted to the cloud is reduced, resulting in cost savings on bandwidth and cloud infrastructure. Additionally, edge devices can operate with limited computational resources, reducing the need for expensive infrastructure and minimizing operational costs.
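Simple arithmetic can estimate the bandwidth savings. The sketch below compares two options: sending every raw reading, or sending one summary per minute. The fleet size, sampling rate, and message sizes are hypothetical numbers chosen only to show the calculation.

```python
def daily_bytes(sensors, rate_hz, bytes_per_msg):
    """Bytes per day if every sensor sends `rate_hz` messages of a fixed size."""
    seconds_per_day = 24 * 60 * 60
    return sensors * rate_hz * bytes_per_msg * seconds_per_day


# Hypothetical fleet: 1,000 sensors sampling at 100 Hz, 16-byte readings.
raw = daily_bytes(sensors=1_000, rate_hz=100, bytes_per_msg=16)
# Alternative: one 64-byte summary per sensor per minute, computed at the edge.
summary = daily_bytes(sensors=1_000, rate_hz=1 / 60, bytes_per_msg=64)

print(f"raw:       {raw / 1e9:.2f} GB/day")
print(f"summary:   {summary / 1e6:.2f} MB/day")
print(f"reduction: {raw / summary:,.0f}x")
```

Under these assumptions the raw stream is about 138 GB per day, while per-minute summaries come to about 92 MB, a reduction of roughly 1,500 times. Real savings depend entirely on the workload and on how much detail the cloud side actually needs.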

Increased privacy and data security: Edge computing also limits how much data is exposed to external networks. Processing data locally reduces the risk of unauthorized access or interception during transmission. This is especially important for applications that handle sensitive data in sectors such as healthcare, finance, and government.

High availability and reliability of AI applications: Edge AI applications can achieve high availability and reliability by operating locally at the edge. With edge computing, devices can continue to function even in situations where network connectivity is compromised or intermittent. By relying on local resources, Edge AI applications ensure uninterrupted operation, making them suitable for critical applications where downtime is not an option.

Continuous improvement and training of AI models at the edge: Edge AI allows for continuous improvement and training of AI models directly at the edge devices. By leveraging local computational resources, devices can update and refine their AI models based on real-time data. This capability enables edge devices to adapt to changing conditions, improve accuracy, and deliver better performance without relying on frequent cloud updates.
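One simple form of on-device learning is online (incremental) training. Here a model is updated with each new sample instead of being retrained in the cloud. The sketch below applies stochastic gradient descent to a toy linear model with one feature. The class name, the learning rate, and the simulated data stream are assumptions for illustration. Fine-tuning real neural networks on device is considerably more involved.

```python
class OnlineLinearModel:
    """Toy model y = w*x + b, updated one sample at a time with plain SGD."""

    def __init__(self, learning_rate=0.01):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        # Gradient of the squared error 0.5 * (prediction - y)^2 w.r.t. w and b.
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return error


model = OnlineLinearModel(learning_rate=0.05)
# Simulated sensor stream where the true relationship is y = 2x + 1.
for _ in range(200):
    for x in (0.0, 0.5, 1.0, 1.5, 2.0):
        model.update(x, 2 * x + 1)
print(round(model.w, 2), round(model.b, 2))
```

Each update touches only two numbers, so it fits easily within the memory and power budget of a small device. With this noise-free stream, the parameters converge toward w = 2 and b = 1.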

Enabling Real-Time Applications

Instant analysis and real-time decision-making: One of the key advantages of edge computing and Edge AI is the ability to perform instant analysis and real-time decision-making. Applications that require immediate responses, such as autonomous vehicles, industrial automation, and emergency response systems, benefit from the low latency and fast processing of edge devices. When data is analyzed at the edge, decisions can be made without waiting for a round trip to the cloud, which improves efficiency and safety.

Use cases in smart cities, remote surgery, virtual reality gaming, etc.: Edge computing and Edge AI find numerous use cases across various industries. In smart cities, edge devices can monitor and optimize traffic flow, manage energy consumption, and enhance public safety through real-time analysis of video feeds and sensor data.

In healthcare, edge devices can enable remote surgery, monitor patient vitals in real time, and provide personalized treatment recommendations. Virtual reality gaming can also leverage edge computing for real-time rendering and reduced latency, enhancing the immersive experience for gamers.

Facilitating offline functionality in resource-constrained environments: Edge computing enables offline functionality in resource-constrained environments. In scenarios where consistent network connectivity is not available, edge devices can continue to operate and provide valuable services without relying on the cloud. For example, in remote areas or disaster-stricken regions, edge devices equipped with Edge AI capabilities can still perform critical functions, such as collecting and analyzing data, even in the absence of network connectivity.
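Offline operation is often implemented with a store-and-forward buffer. Results are queued locally while the link is down and delivered once it returns. The sketch below is a minimal version of that pattern. The `send` callback stands in for a real transport and is hypothetical, as are the class name and the buffer size.

```python
from collections import deque


class StoreAndForward:
    """Queue items locally while offline and deliver them when the link returns.

    `send` is a caller-supplied function (hypothetical here) that returns True
    when an item was delivered and False when the uplink is unavailable.
    """

    def __init__(self, send, max_items=10_000):
        self.send = send
        # Bounded buffer: if it fills up during a long outage, the oldest
        # items are evicted so the device keeps running.
        self.buffer = deque(maxlen=max_items)

    def record(self, item):
        self.buffer.append(item)
        self.flush()

    def flush(self):
        # Deliver in arrival order; stop at the first failure and retry later.
        while self.buffer:
            if not self.send(self.buffer[0]):
                return False
            self.buffer.popleft()
        return True


# Simulated uplink: fails while offline, records deliveries once online.
online = False
delivered = []


def fake_send(item):
    if online:
        delivered.append(item)
    return online


queue = StoreAndForward(fake_send, max_items=3)
for reading in [1, 2, 3, 4]:
    queue.record(reading)  # offline: reading 1 is evicted when 4 arrives
online = True
queue.flush()
print(delivered)
```

The bounded `deque` is a deliberate trade-off. When storage runs out during a long outage, the oldest readings are discarded so the device keeps running. A deployment that cannot tolerate data loss would persist the queue to disk instead.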

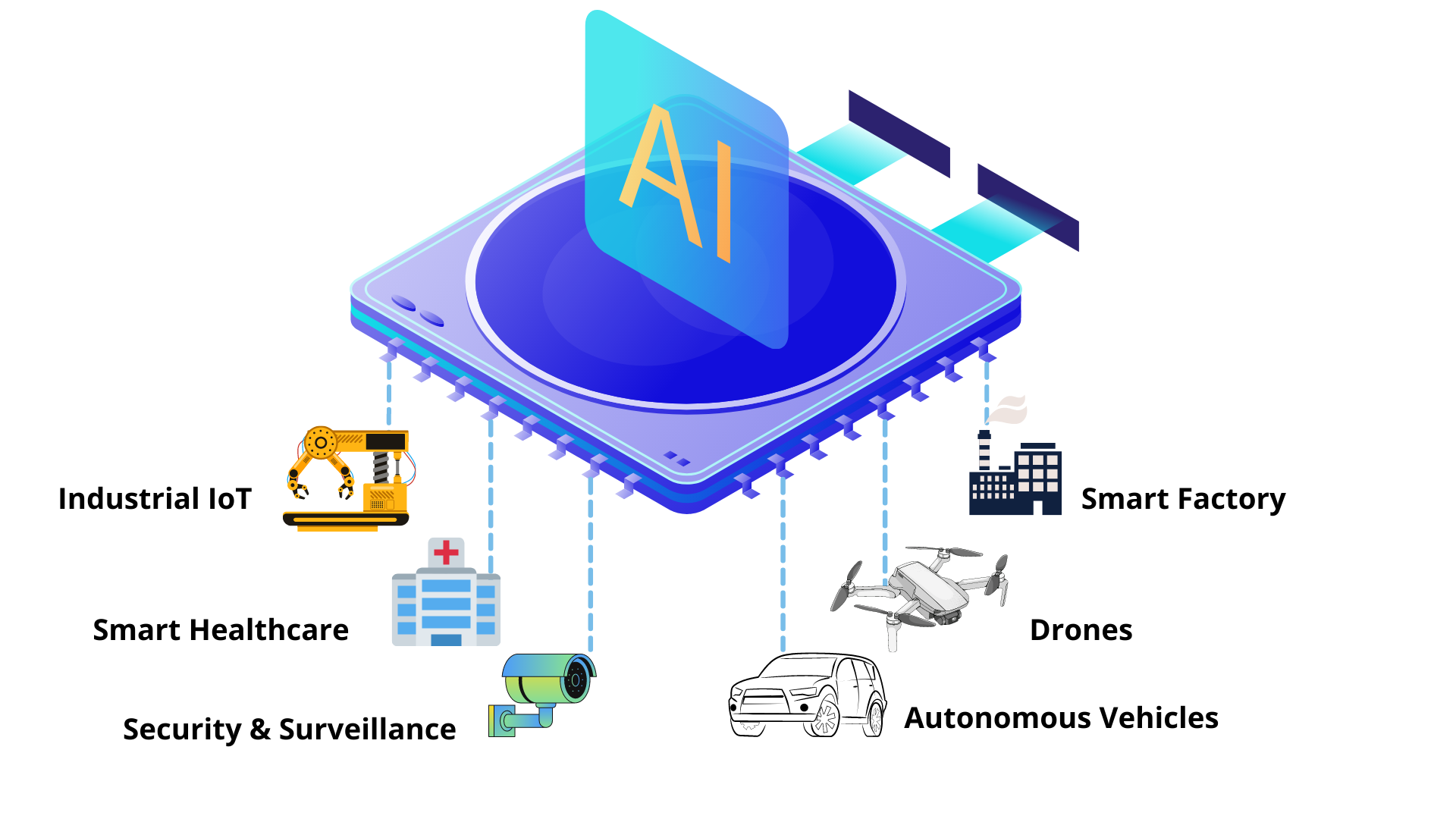

Use Cases and Examples of Edge AI

Edge AI has a wide range of use cases across various industries. In the energy sector, forecasting algorithms can be deployed at the edge to optimize energy distribution and minimize power outages. In manufacturing, predictive maintenance models running on edge devices can detect early signs of machine failure and schedule maintenance proactively, reducing downtime and improving productivity.
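Production predictive-maintenance systems typically use trained models. The core idea of flagging unusual behavior locally can still be shown with a simpler statistical stand-in: a rolling z-score over recent sensor readings. The class name, window size, and 3-sigma limit in this sketch are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScore:
    """Flag readings that deviate strongly from a window of recent values."""

    def __init__(self, window=50, z_limit=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.z_limit = z_limit
        self.min_history = min_history

    def check(self, value):
        is_anomaly = False
        # Only score once there is a minimal baseline to compare against.
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_limit:
                is_anomaly = True
        self.history.append(value)
        return is_anomaly


# Simulated vibration amplitudes: steady operation, then a sudden spike.
detector = RollingZScore(window=20, z_limit=3.0)
normal_flags = [detector.check(v) for v in [1.0, 1.1] * 10]
spike_flag = detector.check(5.0)
print(any(normal_flags), spike_flag)
```

The detector keeps only the recent window in memory, so its memory use stays bounded no matter how long the machine runs.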

In healthcare, AI-powered instruments can analyze patient data in real time, providing timely insights for diagnosis and treatment. Retail can benefit from smart virtual assistants deployed at the edge, offering personalized recommendations and enhancing the customer shopping experience.

Edge AI: Expanding Intelligence at the Edge

Edge AI combines the power of artificial intelligence and machine learning algorithms with edge computing. It enables intelligent decision-making and data processing directly on edge AI devices, without relying on cloud connectivity.

Advantages of Edge AI

Edge AI offers several advantages in real-time applications. It allows for instant analysis and decision-making at the edge, minimizing reliance on cloud services. Because it processes data locally, Edge AI also reduces latency, improves privacy, and increases reliability.

Use Cases and Applications of Edge AI

The applications of Edge AI are vast and diverse. In healthcare, Edge AI facilitates real-time patient monitoring, anomaly detection, and personalized treatment recommendations. In manufacturing, Edge AI enables predictive maintenance, quality control, and real-time analytics. Edge AI also plays a crucial role in intelligent transportation systems, autonomous vehicles, and personalized recommendations in retail.

Challenges and Considerations in Edge AI Deployments

Deploying Edge AI systems comes with its own set of challenges. Limited computational resources and power constraints at the edge must be managed carefully. Model optimization and compression techniques, such as quantization and pruning, are usually needed for efficient inference on edge AI devices. Keeping data consistent and synchronized between edge and cloud AI systems can also be complex.
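Quantization is one common compression technique. It maps 32-bit floating-point weights to 8-bit integers plus a scale factor, which cuts storage for those weights to a quarter. The sketch below shows symmetric per-tensor int8 quantization in plain Python. Frameworks for edge deployment provide their own, more sophisticated implementations, and the function names here are only illustrative.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the scale."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.88, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
```

The rounding error for each weight is at most half the scale factor. This is one reason models often lose little accuracy after quantization, although the actual impact has to be measured for each model.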

Overcoming Challenges

Managing a vast network of edge devices requires robust orchestration and management systems. Organizations need efficient tools to deploy, monitor, and update edge devices seamlessly.

Robust orchestration and management systems for edge devices

These management systems should enable centralized control while also providing flexibility for local decision-making. Additionally, efficient resource allocation and workload balancing mechanisms are essential to ensure optimal performance and scalability across the edge infrastructure.
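A common way to structure such management systems is a desired-state reconciliation loop. The same idea underlies controllers in orchestrators such as Kubernetes. The central system records what each device should be running, and devices report what they actually run. The difference between the two becomes a list of actions. The device IDs, version strings, and function name below are hypothetical.

```python
def plan_rollout(desired, reported):
    """Compare the desired model version per device with what devices report.

    Returns a list of (device_id, action) tuples. Devices that have not
    reported are flagged for investigation rather than updated blindly.
    """
    actions = []
    for device_id, want in sorted(desired.items()):
        have = reported.get(device_id)
        if have is None:
            actions.append((device_id, "unreachable: check connectivity"))
        elif have != want:
            actions.append((device_id, f"update {have} -> {want}"))
    return actions


desired = {"gw-01": "v3", "gw-02": "v3", "cam-07": "v2"}
reported = {"gw-01": "v3", "gw-02": "v2"}
for device, action in plan_rollout(desired, reported):
    print(device, action)
```

Treating silent devices separately avoids repeatedly pushing updates over a link that is down. In a real system the loop would run periodically and retry.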

Optimizing resource allocation and balancing workloads

Resource allocation and workload balancing are critical factors in edge computing. As the number of edge computing devices and applications grows, efficient resource allocation becomes crucial to avoid bottlenecks and maximize performance.

Load balancing algorithms need to distribute computational tasks across edge devices in proportion to their available capacity. This keeps any single device from becoming a bottleneck and uses resources efficiently. Intelligent resource allocation and workload balancing also contribute to energy efficiency and cost optimization in edge environments.
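One simple heuristic for capacity-aware balancing is greedy least-loaded assignment. The largest tasks are placed first, and each goes to the device whose relative load would be lowest after taking it. The sketch below implements that heuristic. The task costs and device capacities are made-up values, and greedy placement is not guaranteed to be optimal.

```python
def assign_tasks(tasks, capacities):
    """Greedy capacity-aware assignment of tasks to edge devices.

    tasks: list of (task_id, cost) pairs.
    capacities: {device_id: relative capacity}; a device with capacity 2.0
    is assumed to handle twice the work of one with capacity 1.0.
    """
    load = {device: 0.0 for device in capacities}
    placement = {}
    # Largest tasks first, a common refinement for greedy scheduling.
    for task_id, cost in sorted(tasks, key=lambda t: t[1], reverse=True):
        # Pick the device whose utilization (load / capacity) stays lowest.
        device = min(capacities, key=lambda d: (load[d] + cost) / capacities[d])
        load[device] += cost
        placement[task_id] = device
    return placement, load


tasks = [("t1", 4), ("t2", 3), ("t3", 2), ("t4", 1)]
capacities = {"edge-a": 2.0, "edge-b": 1.0}
placement, load = assign_tasks(tasks, capacities)
print(placement)
print(load)
```

In this example the device with twice the capacity receives 7 units of work and the smaller device receives 3. That gives utilizations of 3.5 and 3.0, rather than an equal split of tasks.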

Ensuring data consistency and synchronization across edge and cloud

Data consistency and synchronization are fundamental challenges in distributed computing environments. In edge computing, where data is processed and stored across a network of devices, ensuring consistency and synchronization becomes even more complex.
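Last-writer-wins (LWW) is one of the simplest strategies for reconciling edge and cloud copies of the same data. Every write carries a timestamp, and when replicas merge, the newer write wins. The sketch below merges two key-value replicas this way and breaks timestamp ties by node ID, so every replica reaches the same result. The data layout is an assumption for illustration. LWW depends on reasonably synchronized clocks and silently discards one of two concurrent updates. Systems that cannot lose writes therefore use approaches such as vector clocks or CRDTs instead.

```python
def merge_lww(local, remote):
    """Merge two replicas of a key-value store with last-writer-wins.

    Each entry is (timestamp, node_id, value); the higher (timestamp, node_id)
    pair wins, so ties are broken deterministically.
    """
    merged = dict(local)
    for key, entry in remote.items():
        if key not in merged or entry[:2] > merged[key][:2]:
            merged[key] = entry
    return merged


edge = {"setpoint": (105, "edge-1", 21.5), "mode": (90, "edge-1", "eco")}
cloud = {"setpoint": (100, "cloud", 22.0), "fan": (95, "cloud", "auto")}
state = merge_lww(edge, cloud)
print(state)
```

Merging in either direction gives the same result. This property lets replicas converge without a central coordinator.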

Collaboration and Knowledge Sharing

Facilitating collaboration between data scientists and IT/operations teams

Effective collaboration between data scientists and IT/operations teams is essential for the successful implementation of edge computing projects. Data scientists bring expertise in data analysis, machine learning, and algorithm development, while IT/operations teams possess domain knowledge and infrastructure management skills. By fostering collaboration and knowledge sharing, organizations can leverage the collective expertise of both teams, ensuring the development and deployment of efficient computing solutions.

Establishing knowledge-sharing practices and documentation

Knowledge-sharing practices and documentation play a crucial role in enabling effective collaboration and fostering innovation in Edge AI. By establishing clear communication channels and documenting best practices, organizations can ensure that knowledge is shared, lessons learned are captured, and expertise is disseminated across teams. This facilitates continuous improvement, accelerates deployment cycles, and reduces implementation challenges.

Leveraging shared repositories and model registries

Shared repositories and model registries serve as central stores for AI models and their associated artifacts. They enable teams to collaborate, track model versions, and reuse work. With these tools, organizations can streamline model development and deployment and keep edge AI deployments consistent and reliable.
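The sketch below makes the idea of a model registry concrete. It keeps versioned entries with a SHA-256 hash of each model artifact, so an edge device can check that the file it runs matches a registered version. This is a hypothetical, in-memory illustration and does not reflect the API of any particular registry product.

```python
import hashlib


class ModelRegistry:
    """Minimal in-memory registry: model name -> list of versioned entries."""

    def __init__(self):
        self._models = {}

    def register(self, name, artifact: bytes, metadata=None):
        """Store a new version with a content hash of the artifact bytes."""
        versions = self._models.setdefault(name, [])
        entry = {
            "version": len(versions) + 1,
            "sha256": hashlib.sha256(artifact).hexdigest(),
            "metadata": metadata or {},
        }
        versions.append(entry)
        return entry

    def latest(self, name):
        return self._models[name][-1]

    def verify(self, name, version, artifact: bytes):
        """Check that an artifact on a device matches the registered version."""
        entry = self._models[name][version - 1]
        return hashlib.sha256(artifact).hexdigest() == entry["sha256"]


registry = ModelRegistry()
registry.register("defect-detector", b"weights-v1", {"target": "arm64"})
registry.register("defect-detector", b"weights-v2", {"target": "arm64"})
print(registry.latest("defect-detector")["version"])
print(registry.verify("defect-detector", 2, b"weights-v2"))
```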

Encouraging cross-functional training and skill development

Cross-functional training and skill development are essential for fostering a culture of innovation and expertise in Edge AI. By encouraging data scientists to gain a deeper understanding of edge computing principles and IT/operations teams to familiarize themselves with AI concepts, organizations can bridge the gap between disciplines. This cross-pollination of knowledge leads to more effective collaboration, better decision-making, and accelerated deployment of Edge AI solutions.

The Future of Edge Computing and Edge AI

Advancements with 5G networks for enhanced capabilities

The evolution of 5G networks holds tremendous potential for the future of edge computing and Edge AI. 5G networks offer significantly higher data transfer speeds, lower latency, and increased network capacity. These advancements enable even more real-time applications, such as autonomous vehicles, smart cities, and augmented reality, by providing the necessary infrastructure to handle the massive volumes of data generated and processed at the edge.

Integration of artificial intelligence and machine learning

The integration of artificial intelligence and machine learning will continue to shape the future of edge computing and Edge AI. As AI algorithms become more sophisticated and capable of running efficiently on resource-constrained edge computing devices, the range of applications and use cases will expand. Intelligent edge tools and devices will be able to adapt and learn from their environment, improving their decision-making capabilities and enhancing user experiences.

Convergence with blockchain and IoT for decentralized applications

The convergence of edge computing, Edge AI, blockchain, and the Internet of Things (IoT) will lead to the emergence of decentralized applications. By combining the secure and transparent nature of blockchain with the real-time processing capabilities of edge computing and Edge AI, decentralized applications can offer enhanced privacy, security, and autonomy. Industries such as supply chain management, finance, and healthcare can benefit from the traceability, trust, and decentralized decision-making offered by this convergence.

The proliferation of IoT devices and advancements in parallel computation and 5G

The proliferation of IoT devices, coupled with advancements in parallel computation and 5G networks, will fuel the growth of edge computing and Edge AI. As the number of IoT devices continues to increase, the demand for localized data processing and real-time decision-making will surge.

Parallel computation techniques, combined with the capabilities of 5G networks, will enable the processing and analysis of massive amounts of data generated by IoT devices at the edge, leading to improved efficiency, reduced latency, and enhanced user experiences.

Opportunities for enterprises to leverage edge AI for real-time insights, cost reduction, and increased privacy

The future of edge computing and Edge AI presents significant opportunities for enterprises. Organizations can leverage edge AI to gain real-time insights from their data, enabling them to make faster, data-driven decisions. Edge AI also offers cost reduction potential by minimizing data transfer costs, optimizing resource utilization, and reducing reliance on cloud infrastructure. Furthermore, edge AI enhances data privacy by processing sensitive data locally, offering organizations greater control over their data and reducing privacy concerns.

Conclusion

In the digital era, embracing distributed computing at the edge is a strategic move for organizations that want to process data in real time. By addressing the limitations of traditional cloud computing architectures, this approach offers benefits such as reduced latency, enhanced data privacy and security, and lower network bandwidth requirements.

With its ability to enable real-time applications and support offline functionality, this decentralized computing paradigm finds applications in diverse industries such as healthcare, manufacturing, transportation, retail, and more. Successful implementation of this technology relies on collaboration, knowledge sharing, and skill development among teams.

Looking ahead, advancements in 5G networks, integration with artificial intelligence (AI) and machine learning (ML), and convergence with blockchain and the Internet of Things (IoT) will further drive the growth and adoption of this novel computing approach. Embracing this technology empowers organizations to gain a competitive advantage, foster innovation, and thrive in today’s rapidly evolving digital landscape.

FAQs

What is a real-time example of edge computing?

A real-time example of edge computing is autonomous vehicles. By leveraging edge computing, vehicles can process sensor data locally, enabling real-time decision-making without relying on a distant cloud server. This ensures quick responses, enhancing safety and reliability.

Why do we use edge hardware for processing data?

We use edge hardware for processing data to minimize the distance data needs to travel before it is analyzed. Because computations run on the edge devices themselves, edge hardware reduces latency, improves response times, and reduces dependence on centralized cloud infrastructure.

Which situation would benefit the most when using edge computing?

Situations that would benefit the most from using edge computing include scenarios where real-time processing and low latency are crucial. For example, applications in healthcare, manufacturing, and transportation, where immediate analysis, decision-making, and responsiveness are vital, would greatly benefit from the capabilities offered by edge computing.